This post is part of the series “Finding Next-Gen”.

Just got back from GDC. Had a great time showcasing the hard work we’ve been up to at SEED. In case you missed it, we did two presentations on real-time raytracing:

DirectX Raytracing Announcement (Microsoft) and Shiny Pixels and Beyond: Real-Time Raytracing at SEED (NVIDIA)

In case you were at GDC and saw the presentation, you can skip directly here.

During the first session Matt Sandy from Microsoft announced DirectX Raytracing (DXR). He went into great detail over the API changes, and showed how DirectX 12 has evolved to support raytracing. We then followed with our own presentations, where we showcased Project PICA PICA, a real-time raytracing experiment featuring a mini-game for self-learning AI agents in a procedurally-assembled world. The team has worked super hard on this demo, and the results really show it! 🙂

PICA PICA is powered by DXR.

DirectX Raytracing?

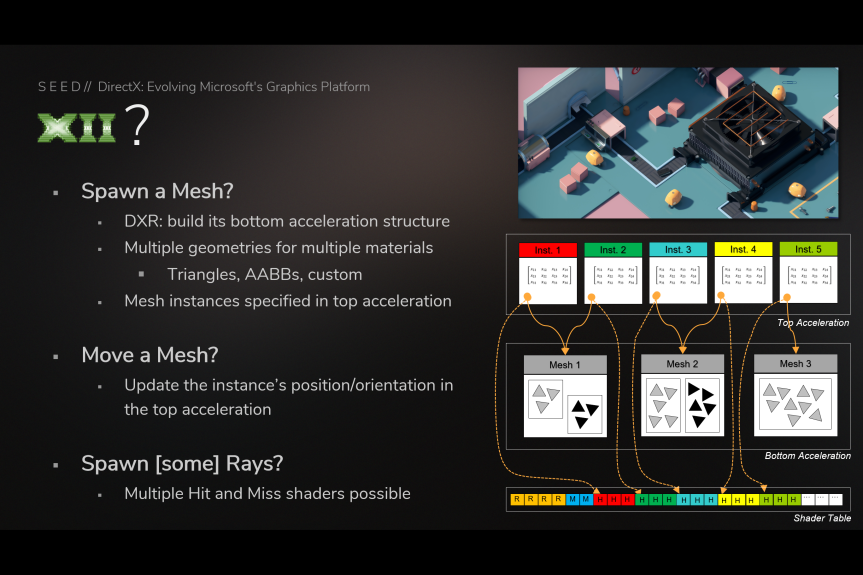

The addition of raytracing to DirectX 12 is exposed via simple concepts: acceleration structures (bottom & top), new shader types (ray-generation, closest-hit, any-hit, and miss), new HLSL types and intrinsics, commandlist-level DispatchRays(…) and a raytracing pipeline state. You can read more about it here.
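To make the roles of these shader types a bit more concrete, here is a tiny CPU-side analogue in Python. It is only a sketch of the control flow, not DXR itself: the names (`Sphere`, `trace_ray`, `ray_generation`, `closest_hit`, `miss`) are hypothetical, and a linear loop over spheres stands in for acceleration-structure traversal.

```python
import math
from dataclasses import dataclass


@dataclass
class Sphere:
    center: tuple
    radius: float
    color: str  # hypothetical payload returned by the hit "shader"


def intersect(origin, direction, sphere):
    """Distance to the front-face hit of a normalized ray, or None."""
    oc = tuple(o - c for o, c in zip(origin, sphere.center))
    b = sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - sphere.radius ** 2
    disc = b * b - c
    if disc < 0.0:
        return None
    t = -b - math.sqrt(disc)
    return t if t > 1e-4 else None


def closest_hit(sphere, t):
    """Runs once, for the nearest intersection along the ray."""
    return sphere.color


def miss():
    """Runs when the ray hits nothing."""
    return "sky"


def trace_ray(scene, origin, direction):
    """Linear search standing in for acceleration-structure traversal."""
    best = None
    for sphere in scene:
        t = intersect(origin, direction, sphere)
        if t is not None and (best is None or t < best[0]):
            best = (t, sphere)
    return closest_hit(best[1], best[0]) if best else miss()


def ray_generation(scene, width, height):
    """One orthographic ray per pixel, looking down +z."""
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            origin = ((x + 0.5) / width * 2 - 1, (y + 0.5) / height * 2 - 1, -5.0)
            row.append(trace_ray(scene, origin, (0.0, 0.0, 1.0)))
        image.append(row)
    return image


scene = [Sphere((0.0, 0.0, 0.0), 0.5, "red"), Sphere((0.0, 0.0, 2.0), 0.9, "blue")]
image = ray_generation(scene, 4, 4)
```

In this toy scene the small red sphere sits in front of the larger blue one, so center pixels resolve to the red closest hit, pixels just outside it see blue, and the corners fall through to the miss result.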

Taken from our presentation, here’s a brief overview of how this works in PICA PICA:

Using Bottom/top acceleration structures and shader table (from GDC slides)

Ray Generation Shadow – HLSL Pseudo Code – Does Not Compile (from GDC slides)

While you don’t necessarily need to use DXR to do real-time raytracing on current GPUs (see Sebastian Aaltonen’s Claybook rendering presentation), it’s a flexible new tool in the toolbox. From the code above, you benefit from the fact that it’s unified with the rest of DirectX 12. DXR relies on well-known HLSL functionality and types, allowing you to share code between rasterization, compute and raytracing. More than just raytracing, DXR also lets you solve sparser and more incoherent problems that you can’t easily solve with rasterization and compute. It’s also a centralized implementation for hardware vendors to optimize, and it becomes a common language for every developer who wants to do raytracing in DirectX 12. It’s not perfect, but it’s a good start and it works well.

Presentation Retrospective

During the presentation we talked about our hybrid rendering pipeline where rasterization, compute and raytracing work together:

PICA PICA’s Hybrid Rendering Pipeline (from GDC slides)

Our hybrid approach lets us develop and apply several interesting techniques and algorithms that rely on rasterization, compute or raytracing, while balancing quality and performance. This shows the flexibility of the API, where one is free to choose a specific pipeline to solve a specific problem. Again, since raytracing is another tool in the toolbox, it can be used where it makes sense and doesn’t prevent you from using the other available pipelines.

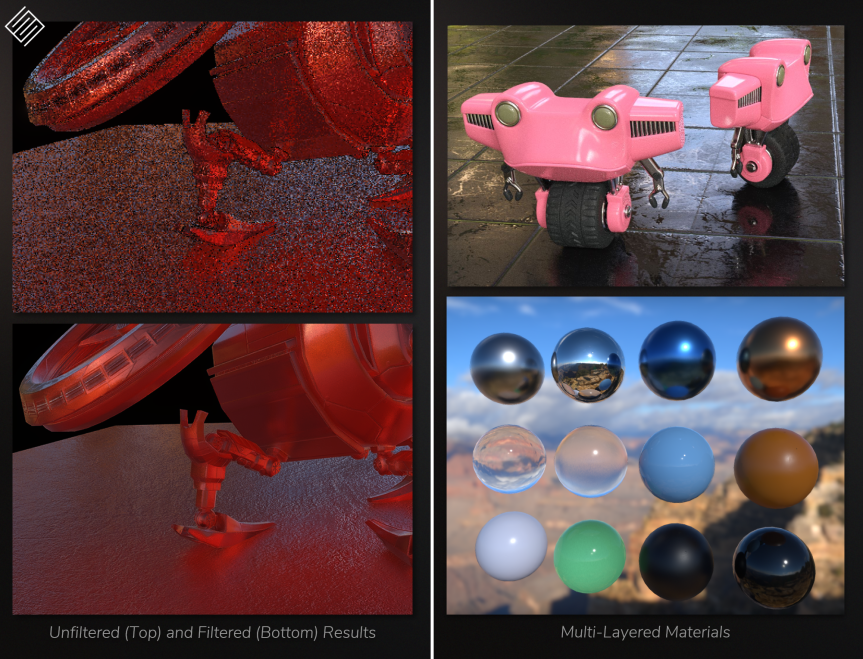

First we talked about how we raytrace reflections from the G-Buffer at half resolution, reconstruct at full resolution, and how it allows us to handle varying levels of roughness. We also presented our multi-layer material system, shared between rasterization, compute and raytracing.

Raytraced Reflections (left) and Multi-Layer Materials (right) (from GDC slides)

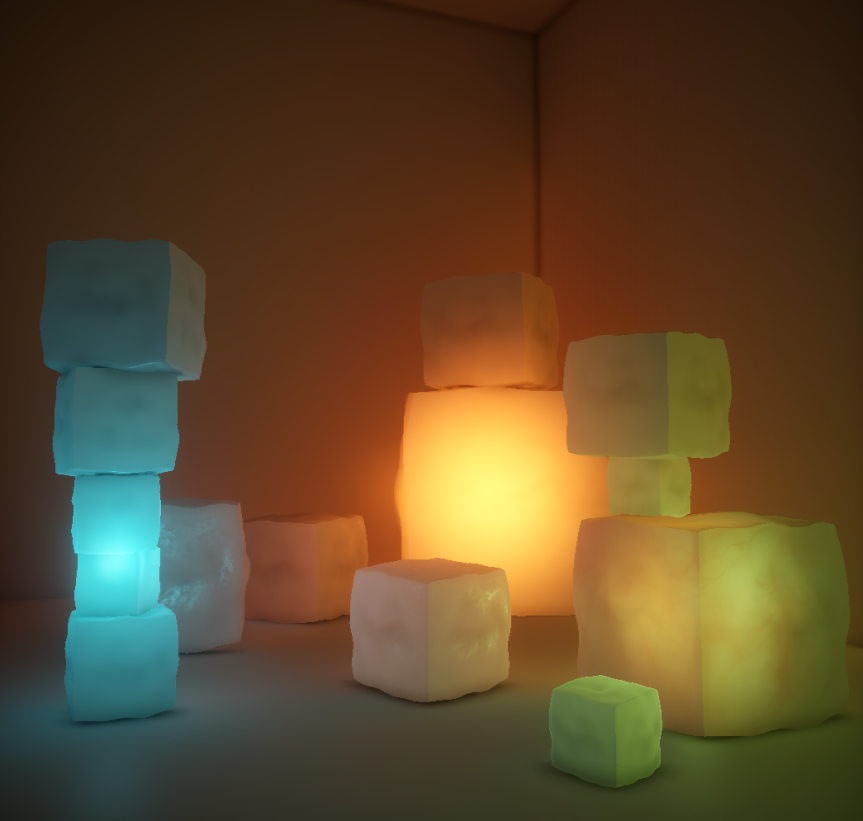

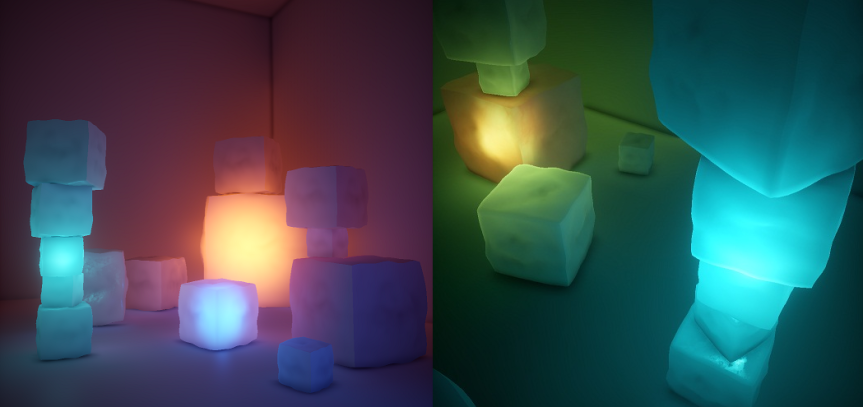

We then followed by describing a novel texture-space approach for order-independent transparency, translucency and subsurface scattering:

Glass and Translucency (from GDC slides)

We then presented a sparse surfel-based approach where we use raytracing to pathtrace irradiance from surfels spawned from the camera.

Surfel-based Global Illumination (from GDC slides)

We also covered ambient occlusion (AO), and how raytraced AO compares to screen-space AO.

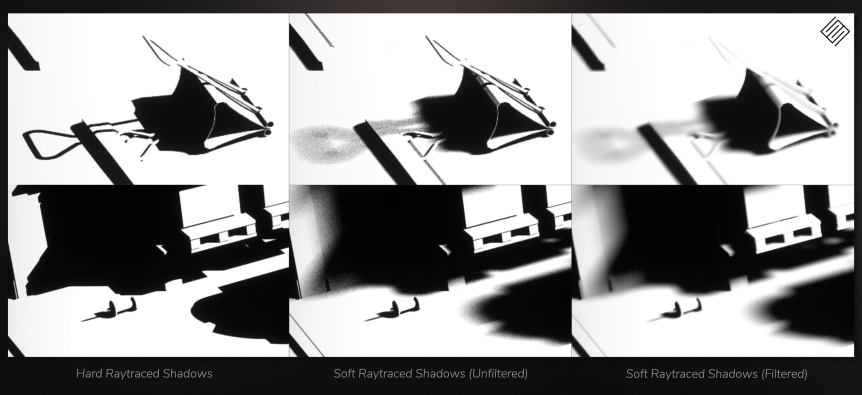
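For readers new to raytraced AO, the core idea can be sketched in a few lines of Python. This is not the PICA PICA implementation: it assumes a surface point with a +z normal, sphere occluders, cosine-weighted hemisphere sampling, and hypothetical names and defaults (`ambient_occlusion`, `max_dist`, `samples`).

```python
import math
import random


def cosine_sample_hemisphere(rng):
    """Cosine-weighted unit direction around the +z normal."""
    u1, u2 = rng.random(), rng.random()
    r, phi = math.sqrt(u1), 2.0 * math.pi * u2
    return (r * math.cos(phi), r * math.sin(phi), math.sqrt(1.0 - u1))


def hits_sphere(origin, direction, center, radius, max_dist):
    """True if the normalized ray hits the sphere within max_dist."""
    oc = tuple(o - c for o, c in zip(origin, center))
    b = sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return False
    t = -b - math.sqrt(disc)
    return 0.0 < t < max_dist


def ambient_occlusion(point, spheres, samples=256, max_dist=2.0, seed=1):
    """Fraction of short hemisphere rays that escape (1.0 = fully open)."""
    rng = random.Random(seed)
    unoccluded = 0
    for _ in range(samples):
        d = cosine_sample_hemisphere(rng)
        if not any(hits_sphere(point, d, c, r, max_dist) for c, r in spheres):
            unoccluded += 1
    return unoccluded / samples


open_sky = ambient_occlusion((0.0, 0.0, 0.0), [])
under_sphere = ambient_occlusion((0.0, 0.0, 0.0), [((0.0, 0.0, 1.0), 0.5)])
```

Unlike a screen-space approach, the occluders here do not need to be visible to the camera: anything the rays can reach contributes to the result.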

Inspired by Schied/NVIDIA’s Spatiotemporal Variance-Guided Filtering (SVGF), we also presented a super-optimized denoising filter specialized for soft shadows with varying penumbra.

Surfel-based Global Illumination (from GDC slides)

Finally we talked about how we handle multiple GPUs (mGPU) and split the frame, relying on the first GPU to act as an arbiter that dispatches work to secondary GPUs in parallel fork-join style.

mGPU in PICA PICA (from GDC slides)
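The fork-join pattern itself is easy to sketch on the CPU. The Python below is only an illustration under loud assumptions: worker threads stand in for GPUs, the frame is split into horizontal strips (the actual split used in PICA PICA may differ), and `split_rows`, `render_rows` and `render_frame` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor


def split_rows(height, workers):
    """Split [0, height) into contiguous row ranges, one per worker."""
    base, extra = divmod(height, workers)
    ranges, start = [], 0
    for i in range(workers):
        end = start + base + (1 if i < extra else 0)
        ranges.append((start, end))
        start = end
    return ranges


def render_rows(width, row_range):
    """Placeholder per-GPU work: 'shade' each pixel as x + y."""
    y0, y1 = row_range
    return [[x + y for x in range(width)] for y in range(y0, y1)]


def render_frame(width, height, gpu_count):
    """Fork: dispatch one strip per worker. Join: stitch strips in order."""
    strips = split_rows(height, gpu_count)
    with ThreadPoolExecutor(max_workers=gpu_count) as pool:
        parts = list(pool.map(lambda r: render_rows(width, r), strips))
    return [row for part in parts for row in part]


frame = render_frame(width=8, height=10, gpu_count=4)
```

The caller plays the arbiter role here: it decides the split, hands out the work, waits for every strip, and assembles the final frame.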

All in all, it was a lot of content for the time slot we had. In case you want more info, check out the presentation:

You can also download the slides: PowerPoint and PDF. You can also watch the presentation live here (starts around 21:30).

Here are a few additional links that talk about DirectX Raytracing and Project PICA PICA:

- Microsoft: Announcing Microsoft DirectX Raytracing

- Ars Technica: DirectX Raytracing is the first step toward a graphics revolution

- PC World: Microsoft’s DirectX Raytracing paves the way for lifelike gaming, the graphics holy grail

- PC Gamer: What Microsoft’s DirectX Raytracing means for gaming

- Anandtech: Expanding DirectX 12: Microsoft Announces DirectX Raytracing

- Rock Paper Shotgun: EA’s Project Pica Pica leads new wave of photorealistic ray-tracing graphics demos at GDC 2018

- Eurogamer: EA just showed off Project Pica Pica, an adorable new tech demo

- Polygon: Nvidia’s latest tech will enable ‘cinematic-quality’ graphics — on unannounced GPUs

Additional Thoughts

As mentioned at GDC, we’ve had the chance to be involved early with DXR, to experiment and provide feedback as the API evolved. Super glad to have been part of this initiative. We still have a lot to explore, and the future is exciting! Some additional thoughts:

Noise vs Ghosting vs Performance

DXR opens the door to an entirely new class of techniques that have never been achieved in games. With real-time raytracing, it feels like the upcoming years will be about managing complex tradeoffs between noise, ghosting, quality and performance. While you can add more samples to reduce noise (and improve convergence) during stochastic sampling, doing so decreases performance. Alternatively, you can reuse samples from previous frames (via temporal filtering), but that can add ghosting. Achieving the right balance here will be important. As DXR gets adopted in games, this topic should generate a lot of good presentations at conferences.
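The noise versus ghosting tension shows up even in a one-pixel toy model. The Python sketch below assumes the simplest possible temporal filter, an exponential moving average with blend factor `alpha`, applied to a noisy one-sample-per-frame signal whose true value jumps from 0 to 1. All names and constants are hypothetical and chosen for illustration only.

```python
import random
import statistics


def temporal_accumulate(history, sample, alpha):
    """Exponential moving average: small alpha reuses more history."""
    return history + alpha * (sample - history)


def simulate(alpha, frames=400, switch=200, noise=0.2, seed=7):
    """Filter a noisy signal whose true value jumps from 0 to 1 at `switch`."""
    rng = random.Random(seed)
    history, out = 0.0, []
    for f in range(frames):
        truth = 0.0 if f < switch else 1.0
        sample = truth + rng.gauss(0.0, noise)
        history = temporal_accumulate(history, sample, alpha)
        out.append(history)
    return out


def residual_noise(out, switch=200):
    """Standard deviation over the 100 steady frames before the jump."""
    return statistics.pstdev(out[switch - 100:switch])


def ghosting_lag(out, switch=200):
    """Frames after the jump until the output first reaches 0.9."""
    return next(i for i, v in enumerate(out[switch:]) if v >= 0.9)


heavy_reuse = simulate(alpha=0.05)  # leans on history: smooth but slow
light_reuse = simulate(alpha=0.5)   # leans on new samples: noisy but fast
```

Comparing `residual_noise` and `ghosting_lag` for the two runs shows the tradeoff directly: heavier history reuse gives a calmer signal that takes many more frames to catch up after the change.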

Comparing Against Ground Truth

We also mentioned that we built our own pathtracer inside our framework. This pathtracer acts as a reference implementation, which we can toggle at any point when working on a feature for our hybrid renderer. This allows us to rapidly compare results, and see how a feature looks against ground truth. Since a lot of code is shared between the reference and the various hybrid techniques, no significant additional maintenance is required. At the end of the day, having a reference implementation will help you make the best decisions to balance quality and performance for your (hybrid) techniques.
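Beyond eyeballing a toggle, a simple numeric metric can help track how far a hybrid result drifts from the reference. The Python sketch below is an assumption on my part rather than something from the talk: it computes mean squared error and PSNR between two small grayscale images stored as nested lists, with hypothetical names `mse` and `psnr`.

```python
import math


def mse(reference, candidate):
    """Mean squared error between two equally sized grayscale images."""
    total, count = 0.0, 0
    for ref_row, cand_row in zip(reference, candidate):
        for r, c in zip(ref_row, cand_row):
            total += (r - c) ** 2
            count += 1
    return total / count


def psnr(reference, candidate, peak=1.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(reference, candidate)
    return math.inf if err == 0.0 else 10.0 * math.log10(peak * peak / err)


# Hypothetical 2x2 images: the pathtraced reference and a hybrid result.
reference = [[0.0, 0.5], [0.5, 1.0]]
hybrid = [[0.0, 0.5], [0.5, 0.9]]

error = mse(reference, hybrid)
quality_db = psnr(reference, hybrid)
```

A single number will not replace looking at the images, but logging it per feature makes regressions against ground truth easier to spot.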

If raytracing is new to you and building a reference ray/pathtracer is of interest, many books and online resources are available. Peter Shirley’s Ray Tracing in One Weekend is quite popular. You should check it out! 🙂

Specialized Denoising and Reconstruction

Also mentioned during the presentation, we built a denoising filter specialized for soft penumbra shadows. While one can use general denoising algorithms like SVGF on the whole image, building a denoising filter around a specific term can achieve greater quality and performance, since you can customize the filter around the constraints of that term. In the near future, one can expect that significant time and energy will be spent on specialized denoisers and custom reconstruction of stochastically sampled terms.

DXR Interop

As mentioned earlier, we share a lot of code between raytracing, rasterization and compute. If you want to bake lightmaps inside your engine (see Sébastien Hillaire’s talk on Real-Time Raytracing For Interactive Global Illumination Workflows in Frostbite), DXR is very appealing because you can evaluate your actual HLSL material shaders. No need for (limited) parameter conversion, which is often necessary when using an external lightmap baking tool.

This is awesome!

Wrapping-up

Even though the API is there and available to everyone, this is just the beginning. It’s an important tool going forward that will enable new techniques in games, and could end up pushing the industry to new heights. I’m looking forward to the new techniques that evolve from everyone having access to DXR, and what kind of rendering problems get solved. I also find it quite appealing for the research community to be able to try and solve problems closer to the realm of real-time raytracing, where researchers can implement their solutions using a raytracing API that everyone can use.

Because it’s unified, it should also be easy for you to pick up the API, experiment and integrate it in your own engine. Again, one doesn’t need this API to do real-time raytracing, but it provides a really nice package and a common language that all DirectX 12 developers can talk around. It also gives hardware makers a clear focal point for optimization. Also, compute hasn’t really changed in a while, so hopefully these improvements will drive improvements in compute and in the pipelines as well. That being said, the API is obviously not perfect, and is still at the proposal stage. Microsoft is open to additional feedback and discussion. Try it out and send your feedback!

Can’t wait to see what you will do with DXR! 🙂